Patterns across the landscape

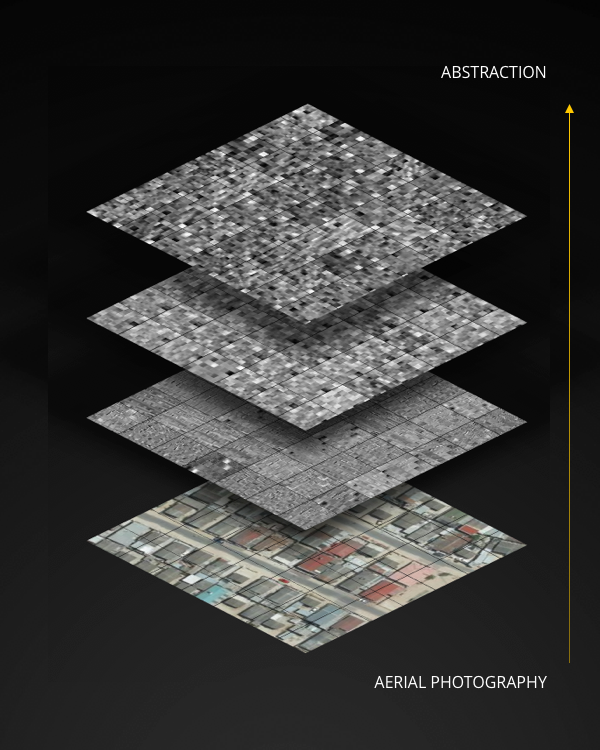

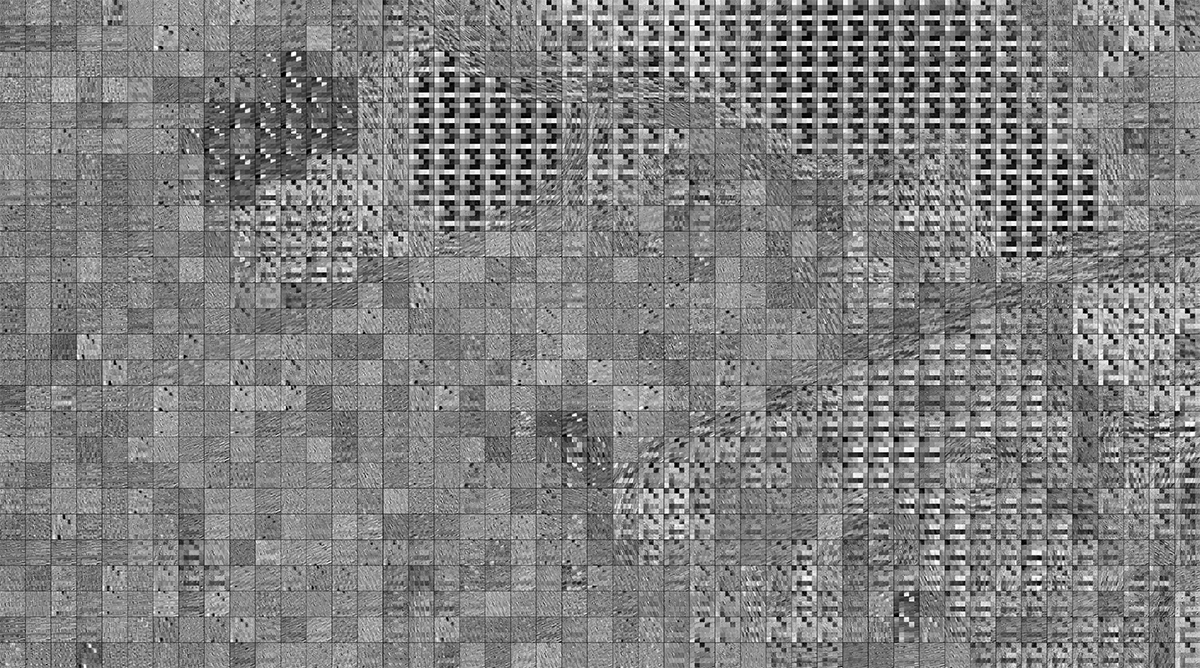

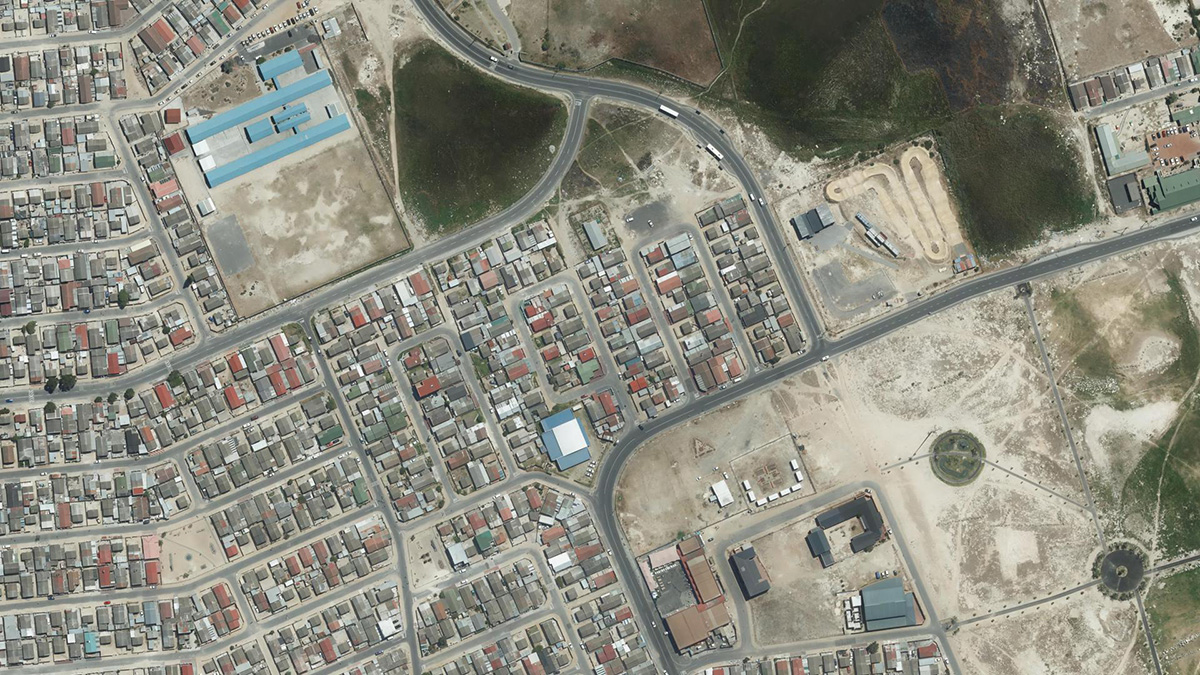

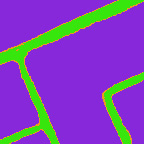

Below, the original aerial imagery can be compared with a sequence of feature maps generated by a well-trained machine learning model. As humans, we can observe the patterns within the feature maps that the model uses to classify features within the aerial imagery.

Convolutional neural networks (ConvNets) build these patterns to detect similarities to, or differences from, a known set of images labelled as road or non-road. The patterns are learned in the network's convolutional layers, which apply computational techniques to recognise features within the input imagery.
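To show how a single kernel turns imagery into a feature map, here is a minimal sketch. It assumes a single-channel (greyscale) patch, "valid" padding, stride 1 and a hand-picked vertical-edge kernel; none of these come from the original project, and a trained ConvNet learns its own kernel values rather than using hand-picked ones.

```python
# Sketch: one kernel slid over one image patch produces one feature map.
# Assumed for illustration: greyscale patch, "valid" padding, stride 1,
# and a hand-picked edge kernel (a trained ConvNet learns its kernels).


def convolve2d(image, kernel):
    """Slide `kernel` over `image` and return the resulting feature map.

    Like ConvNet layers in common deep learning libraries, this computes a
    cross-correlation (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image window currently under the kernel.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Toy 5x5 patch: dark on the left, bright (e.g. road surface) on the right.
patch = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Hypothetical vertical-edge kernel: responds where brightness rises left to right.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(patch, vertical_edge)
for row in feature_map:
    print(row)  # each row → [3.0, 3.0, 0.0]
```

The strong responses sit where the window straddles the dark/bright boundary and fall to zero over uniform road surface, which is the kind of pattern visible in the feature maps above.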

For this imagery, we extracted these patterns and arranged them to match the source landscape, so that the original aerial imagery (the input) can be compared with the grouped feature maps from each convolutional block of the well-trained ConvNets model.

TECHNICAL NOTE

This ConvNets model consists of three convolutional blocks followed by a softmax layer that acts as the classifier at the output. Each convolutional block has one convolutional layer, one ReLU layer and one max-pooling layer. Each convolutional layer contains several kernels (or filters) that are convolved with the block's input.
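The architecture in the note can be sketched as a forward pass: three blocks of convolution, ReLU and max pooling, then a two-class softmax. This is a minimal illustration, not the project's model: the 3x3 kernels, "valid" padding, 2x2 pooling, four kernels per layer, 22x22 single-channel input patch, the linear layer feeding the softmax and the random (untrained) weights are all assumptions.

```python
import math
import random

# Sketch: 3 x [convolution -> ReLU -> max pooling] -> linear -> softmax.
# Assumed (not from the original): 3x3 kernels, "valid" padding, 2x2 pooling,
# 4 kernels per layer, a 1-channel 22x22 input and random, untrained weights.


def conv2d(channels, kernels):
    """One output feature map per kernel; each kernel spans all input channels."""
    kh, kw = len(kernels[0][0]), len(kernels[0][0][0])
    out_h = len(channels[0]) - kh + 1
    out_w = len(channels[0][0]) - kw + 1
    outputs = []
    for kernel in kernels:
        out = [[0.0] * out_w for _ in range(out_h)]
        for i in range(out_h):
            for j in range(out_w):
                out[i][j] = sum(
                    ch[i + di][j + dj] * k[di][dj]
                    for ch, k in zip(channels, kernel)
                    for di in range(kh)
                    for dj in range(kw)
                )
        outputs.append(out)
    return outputs


def relu(channels):
    """Replace negative activations with zero."""
    return [[[max(0.0, v) for v in row] for row in ch] for ch in channels]


def max_pool(channels, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [
            [max(ch[i * size + a][j * size + b] for a in range(size) for b in range(size))
             for j in range(len(ch[0]) // size)]
            for i in range(len(ch) // size)
        ]
        for ch in channels
    ]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def random_kernels(rng, count, in_channels, size=3):
    return [
        [[[rng.uniform(-0.5, 0.5) for _ in range(size)] for _ in range(size)]
         for _ in range(in_channels)]
        for _ in range(count)
    ]


rng = random.Random(0)
x = [[[rng.random() for _ in range(22)] for _ in range(22)]]  # 1 channel, 22x22
shapes = []
for _ in range(3):  # three convolutional blocks
    kernels = random_kernels(rng, count=4, in_channels=len(x))
    x = max_pool(relu(conv2d(x, kernels)))
    shapes.append((len(x), len(x[0]), len(x[0][0])))
print(shapes)  # → [(4, 10, 10), (4, 4, 4), (4, 1, 1)]

features = [v for ch in x for row in ch for v in row]  # flatten to 4 values
weights = [[rng.uniform(-0.5, 0.5) for _ in features] for _ in range(2)]
logits = [sum(w * f for w, f in zip(row, features)) for row in weights]
p_road, p_non_road = softmax(logits)
```

Each block shrinks the spatial size (22 → 10 → 4 → 1 here) while the kernels decide which patterns survive, so the classifier at the end works on a compact summary of the patch.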

Classifying the landscape

The end goal is for the model to learn to classify the aerial imagery as either road or non-road. It uses the patterns (kernels) learned during training to filter, sort & classify the input imagery.

Each classification (road or non-road) is returned with a number between 0 and 1 that indicates the certainty of the result. A value approaching 1 indicates high certainty, whereas a value such as 0.45 indicates much less confidence in what has been detected.
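As a sketch of where such a number can come from: a softmax turns the classifier's two raw outputs (logits) into probabilities between 0 and 1 that sum to 1. The logit values below are made up for illustration.

```python
import math

# Sketch: softmax over two made-up logits, then report the winning class
# and its probability as the certainty of the result.

CLASSES = ("road", "non-road")


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits):
    """Return (label, probability of that label)."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASSES[best], probs[best]


print(classify([3.0, -1.0]))  # confident: road, p ≈ 0.98
print(classify([-0.1, 0.1]))  # uncertain: non-road, p ≈ 0.55
```

With only two classes, a road probability of 0.45 is the same result as a non-road probability of 0.55: both sit close to the 0.5 decision boundary, which is why such values signal low confidence.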

Below is a series of sample pixels across the landscape and their associated classification results.

Learning through iteration

To learn to classify, the system needs training data: imagery that has known labels (or classifications). The software engineer works iteratively, preparing this data, tuning parameters in the machine learning system and reviewing classification results.

The data used to train the ConvNets model is critical to the final classification accuracy: the higher the quality of the labelled data, the better the model can perform.

In the absence of existing labelled data, we used an alternative approach to generate training data, as illustrated below.

This dataset is later divided into two parts: 75% for training and 25% for validation. The training data is used to optimize the ConvNets model (kernels, weights and biases), and the validation data is used to evaluate training performance and guard against overfitting. We want the model to “learn” general features from the training images rather than memorize every labelled image.
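A minimal sketch of the 75% / 25% split, assuming a simple shuffled split with a fixed seed; the sample records are hypothetical.

```python
import random

# Sketch: shuffle once, then cut the list at 75%. The fixed seed makes the
# split reproducible; the samples below are hypothetical.


def train_validation_split(samples, train_fraction=0.75, seed=42):
    shuffled = list(samples)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical labelled samples: (sample_id, label)
samples = [(i, "road" if i % 3 == 0 else "non-road") for i in range(100)]
train, validation = train_validation_split(samples)
print(len(train), len(validation))  # → 75 25
```

Shuffling before cutting stops the validation set from being, say, one corner of the imagery. If road samples were rare, a stratified split (splitting each label separately) would keep the road/non-road ratio the same in both parts.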

When the model’s performance (measured by prediction accuracy) meets expectations, training is halted. We can then use new aerial images (also labelled) to test how well the model works on imagery it has not seen.
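The stopping rule can be sketched as a loop that checks validation accuracy after each epoch. The target of 0.95, the patience safeguard and the `train_one_epoch` / `evaluate` callables are assumptions for illustration; the original does not state the actual thresholds.

```python
# Sketch: stop when validation accuracy reaches a target, or when it has not
# improved for `patience` epochs (an assumed safeguard). `train_one_epoch`
# and `evaluate` are hypothetical callables for the real training code.


def train_until(train_one_epoch, evaluate, target=0.95, patience=3, max_epochs=100):
    best, epochs_without_gain = 0.0, 0
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()
        accuracy = evaluate()  # accuracy on the validation set
        if accuracy >= target:
            return epoch, accuracy, "target reached"
        if accuracy > best:
            best, epochs_without_gain = accuracy, 0
        else:
            epochs_without_gain += 1
            if epochs_without_gain >= patience:
                return epoch, best, "no further improvement"
    return max_epochs, best, "epoch limit"


# Toy stand-in: made-up validation accuracies, one per epoch.
fake_accuracies = iter([0.70, 0.82, 0.90, 0.93, 0.96])
print(train_until(lambda: None, lambda: next(fake_accuracies)))
# → (5, 0.96, 'target reached')
```

The patience check is there because validation accuracy that stalls while training continues is a common sign of overfitting.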

Training & Testing Demonstration

The following demonstration simulates the training and testing process of our model: toggle between the training & testing phases and, when testing, select different locations to see their classification results.

All samples are labelled as either Road or Non-road. These samples (the training data) are selected from a fixed area, or subset, of the overall aerial imagery.

Through iterative testing and evaluation, the model is adjusted to give accurate results on the labelled sample data. An acceptable proportion of low-certainty results is tolerated, and these results are retained for further inspection by an expert.
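Setting aside low-certainty results for an expert could look like the sketch below. The 0.7 threshold and the result records are hypothetical; the project's actual tolerance is not stated.

```python
# Sketch: split results into confident ones and ones retained for expert
# review. Each record is (sample_id, predicted_label, probability of that
# label); with two classes that probability is always >= 0.5.
# The 0.7 threshold and the records are hypothetical.


def split_by_certainty(results, threshold=0.7):
    confident, for_review = [], []
    for result in results:
        _, _, probability = result
        if probability >= threshold:
            confident.append(result)
        else:
            for_review.append(result)
    return confident, for_review


results = [
    ("A", "road", 0.97),
    ("B", "non-road", 0.55),
    ("C", "road", 0.62),
    ("D", "non-road", 0.91),
]
confident, for_review = split_by_certainty(results)
review_share = len(for_review) / len(results)
print([r[0] for r in for_review], review_share)  # → ['B', 'C'] 0.5
```

The `review_share` figure is what would be compared against the acceptable tolerance: if too many results need review, the model goes back for another round of training.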

Results

Applying the model to each pixel of the aerial photography produces an image of the landscape (a pixel-wise probability map) showing how certain the model is that each pixel is road or non-road.
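A minimal sketch of building a pixel-wise probability map: cut out the patch centred on every pixel and ask the classifier for the probability of road. `predict_road_probability` is a hypothetical placeholder for the trained ConvNet (it just scores mean brightness), and clamping coordinates at the image edges is an assumed way to handle border pixels.

```python
# Sketch: P(road) for every pixel. `predict_road_probability` is a
# hypothetical stand-in for the trained ConvNet (it scores mean brightness);
# edges are handled by clamping coordinates (edge padding), an assumption.


def extract_patch(image, row, col, half):
    """Return the (2*half+1) x (2*half+1) window centred on (row, col)."""
    h, w = len(image), len(image[0])
    return [
        [image[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]
         for c in range(col - half, col + half + 1)]
        for r in range(row - half, row + half + 1)
    ]


def predict_road_probability(patch):
    """Placeholder model: the mean brightness of the patch, in [0, 1]."""
    values = [v for row in patch for v in row]
    return sum(values) / len(values)


def probability_map(image, half=1, model=predict_road_probability):
    """Apply `model` to the patch around every pixel."""
    return [
        [model(extract_patch(image, r, c, half)) for c in range(len(image[0]))]
        for r in range(len(image))
    ]


# Toy 3x5 image: dark verge on the left, bright road surface on the right.
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(3)]
for row in probability_map(image):
    print([round(p, 2) for p in row])  # each row → [0.0, 0.33, 0.67, 1.0, 1.0]
```

Values in between 0 and 1 appear along the road edge, where the patch mixes road and non-road. Calling the model once per pixel is simple but slow; a real pipeline would batch patches or run the network over whole tiles.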

After the model has been applied to every pixel of the input aerial images, further image processing techniques (noise removal and image sharpening) can be applied to the output images to remove isolated points and groupings (e.g. elements not associated with the road network).
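One possible noise-removal step is sketched below: threshold the probability map into a road mask, then drop connected groups of road pixels that are too small to be part of the road network. The 0.5 threshold, 4-connectivity, `min_size` and the toy probability map are assumptions; the original does not say which noise-removal technique was used.

```python
from collections import deque

# Sketch: remove isolated road pixels and small groupings from a binary mask.
# Assumed: 0.5 threshold, 4-connectivity and min_size=3 (illustrative only).


def remove_small_components(mask, min_size=3):
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    cleaned = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first search collects one connected group of road pixels.
            component, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(component) >= min_size:  # keep only large enough groups
                for y, x in component:
                    cleaned[y][x] = 1
    return cleaned


probabilities = [
    [0.9, 0.1, 0.1, 0.1],
    [0.8, 0.2, 0.7, 0.1],
    [0.9, 0.1, 0.2, 0.1],
    [0.9, 0.8, 0.1, 0.1],
]
mask = [[1 if p >= 0.5 else 0 for p in row] for row in probabilities]
cleaned = remove_small_components(mask)
for row in cleaned:
    print(row)  # the isolated pixel at row 1, column 2 is gone
```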

Outcomes

Using machine learning techniques to build a road surface classifier (the ConvNets model) significantly reduces the time needed to calculate road surface area compared with manual approaches. Moreover, when it is trained on a high-quality dataset, the model can provide a higher level of accuracy, robustness and confidence.
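Once each pixel is classified, road surface area follows from simple arithmetic: the number of road pixels multiplied by the ground area each pixel covers. The sketch below assumes a ground sample distance (GSD) of 0.5 m per pixel and a toy mask; real imagery would take the GSD from its metadata.

```python
# Sketch: road area = road pixel count x (ground sample distance)^2.
# The 0.5 m GSD and the mask below are assumptions for illustration.


def road_area_m2(mask, gsd_m):
    """Area in square metres covered by pixels marked 1 (road)."""
    road_pixels = sum(sum(row) for row in mask)
    return road_pixels * gsd_m ** 2


mask = [
    [1, 0, 0, 0],
    [1, 0, 0, 0],
    [1, 0, 0, 0],
    [1, 1, 0, 0],
]
print(road_area_m2(mask, gsd_m=0.5))  # → 1.25 (5 pixels x 0.25 m² each)
```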

Outcomes included:

- Machine learning tools to support expert survey requirements

- Image processing techniques and a machine learning process for building the ConvNets model

- New cost-efficient workflow of road surface estimation

- Additional services and outputs can be augmented with this automated and data-driven approach to help clients understand their asset portfolio.

Project Credits

We appreciate the client's support for the opportunity to develop this project.

Slaven Marusic, Digital Insights Leader

Caihao Cui (Chris)

Greg More

Project team

Chris von Holdt, Kevin Johnson, Peter Wilson, Cheryl Beuster, Julian Hendricks, Hosna Tashakkori, Mohammad Ghasab, Steven Haslemore
